Designing and Building YouTube

Introduction

YouTube is the most popular video streaming website worldwide. With over 2 billion monthly active users, the scale at which YouTube operates is massive.

Functional Requirements

- Upload Videos

- Upload Metadata and Thumbnail

- Like, Subscribe and Comment on Videos

- Smooth Video Streaming

Non-Functional Requirements

- High Availability

- Eventual Consistency

- Average Latency 200ms

- High Reliability: no uploaded video should be lost

Capacity Estimation

- Assume YouTube has 5 million daily active users

- Each user watches 5 videos per day

- Out of those 5 million users, 200,000 upload 1 video per day

- Average video size: 200 MB

- New storage needed per day: 200,000 x 200 MB = 40 TB
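The arithmetic above can be reproduced with a few lines of Python. This is only a back-of-the-envelope sketch: the constants are the assumptions from the list, decimal units are used (1 TB = 1,000,000 MB), and the yearly figure is a simple extrapolation that ignores replication and transcoded copies.

```python
# Back-of-the-envelope estimate using the assumed figures from the list above.
DAILY_ACTIVE_USERS = 5_000_000
VIDEOS_WATCHED_PER_USER = 5
DAILY_UPLOADERS = 200_000
AVG_VIDEO_SIZE_MB = 200

MB_PER_TB = 1_000_000  # decimal units: 1 TB = 1,000,000 MB

daily_views = DAILY_ACTIVE_USERS * VIDEOS_WATCHED_PER_USER
daily_storage_tb = DAILY_UPLOADERS * AVG_VIDEO_SIZE_MB / MB_PER_TB
# Simple extrapolation; ignores replication and extra transcoded renditions.
yearly_storage_pb = daily_storage_tb * 365 / 1_000

print(f"Daily video views: {daily_views:,}")
print(f"New storage per day: {daily_storage_tb:.0f} TB")
print(f"New storage per year: {yearly_storage_pb:.1f} PB")
```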

High Level Design

- User — Phone, Desktop, Tablets

- CDN — Video streamed from CDN

- Microservices — Everything else goes through the API servers: feed generation, user accounts, metadata, etc.

Database Schema

Videos are stored in an object store or HDFS. All other information can be stored in a NoSQL database like Cassandra, where the key is the VideoID and the value is all the information related to that video.

With a NoSQL database, we need an additional table to store the relationship between users and videos. Let’s call this table ‘UserVideo’. Similarly, subscribers can be stored in a ‘Subscriber’ table. For both of these tables, we can use a wide-column datastore like Cassandra. For the ‘UserVideo’ table, the key would be the ‘UserID’ and the value would be the list of ‘VideoID’s the user owns, stored in different columns. We will have a similar scheme for the ‘Subscriber’ table.
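To make the key/value layout concrete, here is a minimal in-memory sketch of the three tables. Plain dictionaries stand in for Cassandra, and every field name (`owner`, `title`, `path`) as well as the exact shape of the ‘Subscriber’ table is an assumption for illustration, not a schema taken from any real system.

```python
from collections import defaultdict

# In-memory stand-ins for the tables; a real deployment would use a
# wide-column store such as Cassandra. All field names are hypothetical.
video_table = {}                      # VideoID -> video metadata
user_video_table = defaultdict(list)  # UserID -> VideoIDs the user owns
subscriber_table = defaultdict(list)  # UserID -> UserIDs subscribed to that user (assumed shape)

def add_video(user_id, video_id, title, object_store_path):
    """Store the metadata row and record the user -> video relationship."""
    video_table[video_id] = {
        "owner": user_id,
        "title": title,
        "path": object_store_path,  # where the video bytes live in the object store
    }
    user_video_table[user_id].append(video_id)

def subscribe(subscriber_id, channel_user_id):
    """Record that subscriber_id follows channel_user_id."""
    subscriber_table[channel_user_id].append(subscriber_id)

def videos_of(user_id):
    """Return the metadata of every video owned by user_id."""
    return [video_table[vid] for vid in user_video_table.get(user_id, [])]
```

For example, after `add_video("u1", "v1", "Intro", "videos/v1.mp4")`, calling `videos_of("u1")` returns a one-element list holding that video's metadata.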

Note: this is not the best schema, but it's a good start.

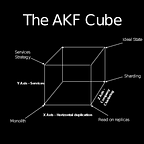

Scaling the services

Sharding by UserID

Partitioning by UserID : Partitioning based on ‘UserID’ so that we can keep all videos of a user on the same shard.

Partitioning by VideoID

- Node Exhaustion — A node runs out of resources (memory, CPU, etc.)

- Shard Exhaustion — A shard outgrows its capacity

- Shard Allocation Imbalance — Data is unevenly distributed across shards

- Hot Key — When sharding by UserID, celebrities get far more views than other users (Justin Bieber, for example, pulls in a huge number of views), so the shard that holds their videos becomes a hotspot
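The hot-key problem can be illustrated with a small simulation. This is a sketch under stated assumptions: the shard count, the key names, and the request mix (one celebrity receiving as many requests as a thousand regular users combined) are all made up, and a SHA-256 hash modulo the shard count stands in for whatever placement scheme a real system would use.

```python
import hashlib
from collections import Counter

NUM_SHARDS = 8  # hypothetical shard count

def shard_for(key: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a key to a shard with a stable hash (deterministic across runs)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

# Simulated requests as (owner UserID, VideoID) pairs: one celebrity with
# 1,000 requested videos plus 1,000 regular users with one video each.
requests = [("celebrity", f"video-{i}") for i in range(1000)]
requests += [(f"user-{i}", f"video-{1000 + i}") for i in range(1000)]

load_by_user = Counter(shard_for(user) for user, _ in requests)
load_by_video = Counter(shard_for(video) for _, video in requests)

print("Busiest shard when sharding by UserID: ", max(load_by_user.values()))
print("Busiest shard when sharding by VideoID:", max(load_by_video.values()))
```

Sharding by VideoID spreads the celebrity's videos across shards, but a single viral video is still a hot key of its own, which is one reason videos are served from a CDN.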

This blog from HighScalability is the best resource on the topic.

Upload Service

- Pre-signed URL for safe uploads

- Each video gets a unique ID at upload time

- Metadata gets stored in the database

- Video gets stored in object storage like S3

- Batch Job — Runs in the background for each video to perform tasks like thumbnail generation, metadata extraction, video transcripts and encoding

- Transcoding servers — Transcoding is the process of converting a video into other formats that are easier to stream on a given device (MPEG, HLS, etc.)

- Transcoding enables video streams at different resolutions

- Video compression: VP9, HEVC (compression and decompression algorithms that produce a smaller size at comparable quality)

Video Streaming

Protocols (different streaming protocols require different video encodings)

- MPEG (Moving Picture Experts Group)

Video is streamed directly from the CDN. When you record a video, it is saved in a certain format and, in particular, at a certain bitrate.

Bitrate: the rate at which bits are transferred from one location to another. A higher bitrate generally means higher video quality.
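Because bitrate is bits per second, it also determines how large a video file is. The sketch below shows the relationship; the bitrates attached to each resolution are illustrative assumptions, not values from any specification.

```python
def video_size_mb(bitrate_kbps, duration_seconds):
    """Approximate size in MB: bitrate (kilobits/s) x duration, 8 bits per byte."""
    bits = bitrate_kbps * 1000 * duration_seconds
    return bits / 8 / 1_000_000

# Illustrative bitrates for a 10-minute video (assumed values).
for label, kbps in [("360p", 1000), ("720p", 2500), ("1080p", 5000)]:
    print(f"{label}: {video_size_mb(kbps, 600):.1f} MB")
```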

Why Convert?

- Raw video consumes a large amount of storage space

- Different devices support different formats

- It is a good idea to deliver higher-quality video to users with fast connections and lower-quality video to users with slow ones

- To keep the video playing continuously, we need to be able to switch quality based on the available bandwidth

- Compression can be lossy or lossless — with lossy compression, some data is lost when converting from one format to another

Transcoding Process

Transcoding is computationally intensive, and requirements differ from user to user (watermarks, tags, thumbnails). Facebook uses DAGs (directed acyclic graphs) to describe this processing.

Not-So-Good Approach

- Monolith Script — Hard to maintain because of the many formats and changing requirements. It is also time-consuming: a batch-oriented sequential pipeline uploads the file, stores it, triggers processing, and only makes the video available for sharing once everything is complete. The more batch-oriented the pipeline, the higher the latency.

Adaptive Streaming

- Delivery of content based on the network bandwidth and the device type of the user
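A minimal sketch of that selection logic follows. The rendition ladder, the 80% headroom factor, and the function name `pick_rendition` are assumptions for illustration; real players measure bandwidth continuously and switch per segment.

```python
# (name, height in pixels, bitrate in kbps), highest quality first.
# The ladder values are hypothetical.
RENDITIONS = [
    ("1080p", 1080, 5000),
    ("720p", 720, 2500),
    ("480p", 480, 1000),
    ("240p", 240, 400),
]

def pick_rendition(bandwidth_kbps, device_max_height=1080, headroom=0.8):
    """Pick the best rendition that fits the device and the measured bandwidth."""
    budget = bandwidth_kbps * headroom  # leave a margin for bandwidth fluctuations
    for name, height, kbps in RENDITIONS:
        if height <= device_max_height and kbps <= budget:
            return name
    return RENDITIONS[-1][0]  # nothing fits: fall back to the lowest quality

print(pick_rendition(8000))                         # fast connection, large screen
print(pick_rendition(8000, device_max_height=720))  # fast connection, small screen
print(pick_rendition(1500))                         # slow connection
```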

SVE (Streaming Video Engine)

- Overlaps uploading and processing of video

- Parallelizes video processing by splitting videos into small chunks and processing them in parallel

- Parallelizes storing of uploaded videos

- SVE splits the video into GOPs (groups of pictures). Each GOP is encoded separately, without referencing earlier GOPs, so each one can be played back independently

- The video is sent to the preprocessor rather than directly to storage

- As part of submitting jobs to the scheduler, the preprocessor dynamically generates the DAG of processing tasks to be used for the video

- Workers pull tasks from the queue
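The chunk-and-parallelize idea from the list above can be sketched with a thread pool. This is a toy model, not SVE itself: frames are plain strings, `encode_chunk` is a placeholder for real encoding work, and the chunk size and worker count are arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_chunks(frames, chunk_size):
    """Split the frame list into independent, GOP-like chunks."""
    return [frames[i:i + chunk_size] for i in range(0, len(frames), chunk_size)]

def encode_chunk(chunk):
    """Placeholder for real encoding; each chunk needs no data from the others."""
    return [f"enc({frame})" for frame in chunk]

def process_video(frames, chunk_size=4, workers=4):
    """Encode all chunks in parallel, then join them in the original order."""
    chunks = split_into_chunks(frames, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        encoded = list(pool.map(encode_chunk, chunks))  # map preserves input order
    return [frame for chunk in encoded for frame in chunk]

frames = [f"f{i}" for i in range(10)]
print(process_video(frames))
```

Because no chunk depends on another, a failed chunk can also be retried on its own without reprocessing the whole video.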

The preprocessor splits the video.

The DAG scheduler splits the work into multiple tasks and puts them in the queue.

The resource manager manages the efficiency of resource allocation.

Task workers run the tasks; different task workers run different tasks.

- The initial video is split into audio, video and metadata

- Audio and video are split based on the number of bitrates, encoding happens in parallel, and the output segments are joined for storage

- SVE is a parallel processing framework that specializes data ingestion, parallel processing, the programming interface, fault tolerance, and overload control for videos at massive scale
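The DAG described above (split into tracks, encode each track per bitrate in parallel, join for storage) can be sketched with Python's standard `graphlib` module (Python 3.9+). The task names and the two-bitrate example are hypothetical; this only shows how a scheduler could derive stages of tasks that are safe to run in parallel, not how SVE is implemented.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

def build_dag(bitrates):
    """Return a mapping of task -> set of tasks it depends on (names are hypothetical)."""
    dag = {"split_tracks": set(), "extract_metadata": {"split_tracks"}}
    encode_tasks = set()
    for track in ("video", "audio"):
        for bitrate in bitrates:
            task = f"encode_{track}_{bitrate}"
            dag[task] = {"split_tracks"}  # each encode only needs the split to finish
            encode_tasks.add(task)
    dag["join_and_store"] = encode_tasks | {"extract_metadata"}
    return dag

def schedule(dag):
    """Group tasks into stages; tasks within one stage can run in parallel."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose dependencies are done
        stages.append(ready)
        sorter.done(*ready)
    return stages

for number, stage in enumerate(schedule(build_dag(["high", "low"])), start=1):
    print(f"stage {number}: {stage}")
```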

Error Handling

- Upload Error — Retry

- Split Video Error — If the client cannot split the video properly, the entire video is passed to the server and split on the server side

- Transcoding Error — Retry

- Preprocessor Error — Regenerate DAG

- DAG scheduler Down — Reschedule

- Resource Manager Queue Down — Use a replica

- Task Worker Down — Retry

- API Server Down — Direct to other server

- Metadata Cache Server Down — Data is replicated, so you can fetch it from other nodes

- Metadata DB Server Down — Promote a slave to master, or use another slave
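Several of the entries above boil down to "retry". A minimal retry helper might look like the sketch below. The exponential backoff, the attempt count, and the helper name `retry` are assumptions added for illustration; the list itself only says to retry.

```python
import time

def retry(task, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call task() until it succeeds, waiting longer after each failure."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == attempts:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** (attempt - 1))  # 0.1s, 0.2s, 0.4s, ...

# Usage: a hypothetical upload step that fails twice before succeeding.
calls = {"count": 0}

def flaky_upload():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("transient network error")
    return "uploaded"

print(retry(flaky_upload))
```

Retries are only safe for idempotent steps, which is one reason each video gets its unique ID before the upload starts.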

Note: this post is inspired by the book System Design Interview (Amazon) and Educative.